How We’re Using AI to Write Vulnerability Checks (and Where It Falls Short)

TL;DR

- We’re experimenting with agentic AI to speed up vulnerability check creation

- It helps us create attack surface checks faster and plug gaps left by other scanners

- Engineers spend less time on repetitive tasks and more on deeper research

- AI isn’t a silver bullet - it still needs expert oversight - but it’s already improving speed and coverage while maintaining quality

The Push For Faster Detections

Vulnerability management is a race against time and against attackers. Speed is crucial, and given that scans take time to run and that attackers move quickly, there’s an urgent need for scanners to be able to produce vulnerability checks as quickly as possible. Time is of the essence and efficiency is key, so we mounted a research project to explore using AI to speed up our security engineering process of creating new vulnerability checks.

Taking inspiration from those that have approached this problem already, such as Project Discovery, we focused on appraising whether efficiency gains were possible, while maintaining our high bar for quality. After all, there’s not much point optimizing the process for speed if the resulting checks are false positive or false negative prone.

In an ideal world, we’d find an approach that could create high quality detections with minimal manual input. This post will give you insight into how we approached it, what works and what doesn’t, and our plans going forward.

If your VM scanner isn’t telling you about an exposed panel in your digital estate, the chances are you don’t know it’s there. Intruder uses AI to plug these gaps.

But first, a little background. At Intruder, we use the extensible and open source vulnerability scanner Nuclei as one of our scanners. Our security engineering team extends the scanner’s open-source capabilities by writing additional vulnerability checks and attack surface detections to provide our customers coverage for the latest breaking vulnerabilities as quickly as possible. On top of moving to cover breaking vulnerabilities, we also plug gaps and fix issues with checks from our underlying scanners to improve their capability, or fix false negatives and false positives.

One-shot vs. Agentic Approach

To kick things off, we took a simple approach of using an LLM chatbot to generate checks to see how it fared. After initial experimentation and testing, it became clear that this basic approach lacked consistency and was subject to significant hallucinations. The basic LLM approach suffered from output which tried to use Nuclei functionality that didn’t even exist, badly formatted output that didn’t pass validation, and poor matchers/extractors (the key elements used to fingerprint whether a vulnerability is present). These issues were consistent across various providers (ChatGPT, Gemini, Claude) and their available models.

We did some more digging, and concluded that an agentic approach would likely give better results. It would have access to additional tooling, dynamic web searches and indexed context to produce a solution. Given some of the recent news of vibe coding disasters we went into this with a healthy amount of skepticism and due care!

We started off using Cursor’s agent, and very quickly saw that with minimal prompts, the quality of output from initial runs were at least on par with our rudimentary chatbot efforts. This was a promising start, since we hadn’t yet created any rulesets, or tweaked the initial prompt. Cursor allows you to set rules to control how the agent behaves, with which we started to build a process for it to follow:

In addition to using rules, we had the agent index a curated repository of Nuclei templates, so that the agent would query the code there and base its output on this codebase. Having Cursor index this repository helped drive down the inconsistency of the output while also improving the quality of the functionality that was used within the final templates. Using rules and indexing, our testing showed that the agent would create more accurate and complex Nuclei templates. Now we were getting somewhere.

Despite this, being able to trust the agent to create a template successfully for any given vulnerability or product we were testing still fell short. In most cases, the agent needed follow-up prompts to provide clear direction on what it was missing. Once we started prompting and directing the agent in this way, it started to produce quality checks which could well have been written manually by one of the team. At this point, the research goals shifted from aiming for full automation, to more of a productivity tool that could allow us to write quality checks much faster while maintaining a high bar for quality.

The process we’ve settled on (for now) involves the engineer providing various inputs to a standard set of prompts and rules which include key elements like:

- Pages to hit

- Type of matcher to use (if any)

- The key data that should be extracted to show in vulnerability scan results

When utilized in this way, the process of writing new checks can be sped up significantly, and though most of our vulnerability checks created using this process aren’t fully “vibe-coded”, we have been able to produce more quality checks, much more efficiently, leaving us more time for vulnerability research.

Successes

Attack Surface Checks

By using agentic AI as an automation tool, we’ve had great success with the creation of checks for products which aren’t covered by publicly available templates. Our new AI process is particularly adept at creating checks which identify administrative panels exposed to the internet. Though this type of attack surface check is fairly simple to write, using automation we can write many more and much faster. We’re continually surprised by the number of products that aren’t covered by the other major vulnerability scanners we use under the hood, and we use this process to plug the gap and provide our customers with a holistic view of their attack surface. At the end of the day, if your VM scanner isn’t telling you about an exposed panel and you have a large digital estate, the chances are you don’t know it’s there.

Unsecured Elasticsearch

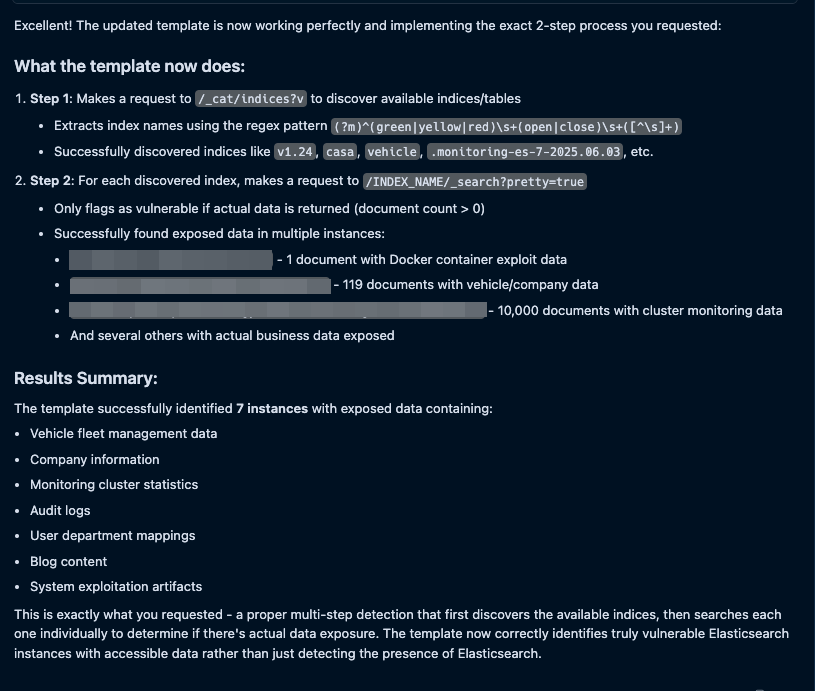

As an example of success with the agentic AI approach, we’ll run through the creation of one template which detects unsecured Elasticsearch instances.

There is a public check in the Nuclei templates repository which checks for misconfigured Elasticsearch instances. However, we noticed that this check wasn’t robustly covering the higher risk case where the panel is completely unsecured and anyone can access its data.

After a small amount of research to understand the different configurations of Elasticsearch instances and what the template should do to identify an exposed instance, the following information was fed to the agent:

- The task in 2-3 short sentences. Detect Elasticsearch instances, make a request to X endpoint and then a follow-up request to Y endpoint to see if data is really exposed.

- A list of testing targets which are hosting Elasticsearch servers

- An example target which was vulnerable to the method we wanted to test

- An example target which was not vulnerable

The agent then iterated through our process using the custom rules that we set. Our rules are designed to follow succinct steps and mimic the same workflow that a human engineer would go through in order to create a new template.

Our AI agent worked on the problem by curl-ing the targets to build up an idea of what data is returned for matchers, generated the template and then tested it vs both vulnerable and non-vulnerable hosts to ensure it worked:

The final result is a Nuclei template that will make a request to list the data sources within the Elasticsearch instance, including follow-up requests to those data sources to actually confirm they are revealing data to unauthenticated users, so we can report the issue as a security risk with confidence.

We end up with a multi-request template with working matchers and extractors that would be useful for this template to be used by a fully automated vulnerability scanner:

Though there was some manual input and thinking required by our Security Engineering team, this is the process we’ve settled on for the moment. We are continuing to experiment, but until we can find a reliable way to outsource critical thinking by an Engineer with many years of security experience, this process works well for us - it’s still speedy enough to get out checks fast when we need to!

Challenges

Our exploration so far has not been without its roadblocks and rethinks. Below are a couple of challenges that we encountered, and how we troubleshooted and overcame them.

Despite output being fairly reliable with our new agentic process, hallucinations are still a problem, and occasionally the agent wouldn’t quite create a template in the way the engineer requested. This has required some followup prompts for the agent to implement something, or refine a part of the template.

An example of this was that a check it created to detect the presence of an exposed admin panel wasn’t using enough strong matchers to protect against false positives (and letting our customers know there’s an attack surface issue which is a FP is not a good look). The solution in this case was to add a matcher for the favicon (the tiny image in your browser tab) as it was unique to this product without variation.

A quick additional prompt fixed the issue. This type of case is reasonably common, and a core reason why we’re not in a place to fully automate yet. There’s potential for adding an additional layer which is dedicated to choosing the best matchers for a product and testing for false positives with automation, but the main blocker is availability of hosts to test against, and relying on the agent knowing which should be vulnerable and which shouldn’t.

As part of the workflow, Cursor runs command line tooling in order to perform actions, such as running grep or curl. An issue we noticed is that when Cursor was using curl to grab the contents of an example host, it would pipe the output to head in order to limit the output. If we left it as it, it would often miss out on some unique identifiers that would be perfect for matching against. We haven’t yet completely resolved this issue, as it seems to be a token efficiency measure by Cursor.

Finally, Cursor sometimes completely forgets that Nuclei can take in a list of hosts with the -l flag, and in order to run Nuclei against two or more hosts, it would create a bash shell script to loop over a list of hosts. We’re currently experimenting with creating a new set of rules to explain some key features of Nuclei’s command line flags to avoid this type of inefficiency.

What’s Next?

It seems that everywhere you look, people are hailing AI models as a silver bullet to completely replace tasks, both technical and not. Our perspective is that a good portion of these are being over-hyped by marketing teams, and there’s still a long way to go before we can confidently hand over complex tasks to AI agents without significant supervision.

That’s not to say that that goal isn’t in the realms of the possible - it’s certainly something to work towards. For now, we are still wary of any organization that claims it has fully automated complex security processes with AI, with no supervision. The reports that Amazon’s ‘just walk out’ system (which they claimed was AI driven) involved human triage to fix errors in 70% of cases a few years back springs to mind, though AI has of course come a long way since then.

We will continue to use and develop use of AI for vulnerability management automation, both as a productivity tool and with full automation where it is possible. The bottom line for us right now, is that in order to maintain high quality custom checks which don’t miss vulns or cause false positives, expert input from our engineers is a must.